Mapping Life – Quality Assessment of Novice vs. Expert Georeferencers

-- Contributed by Elizabeth R. Ellwood, Florida State University, with Henry L. Bart, Jr., Michael H. Doosey, Dean K. Jue, Justin G. Mann, Gil Nelson, Nelson Rios, Austin R. Mast

Citizen scientists participate in a host of activities that advance scientific research. These individuals are not trained scientists, but their contributions to research enable scientists to scale up their research across taxa and geographies. Global efforts to digitize natural history collections have included citizen scientists in curatorial work, imaging, and perhaps most extensively in the transcription of specimen label information. It is less common, however, for citizen scientists to assist in georeferencing specimen collection locality information. In part, this is because there is no online citizen science georeferencing platform. Instead, most projects employ technicians and interns in-house on a project-by-project basis. However, it is also because little is known about how effective citizen scientists are at this task.

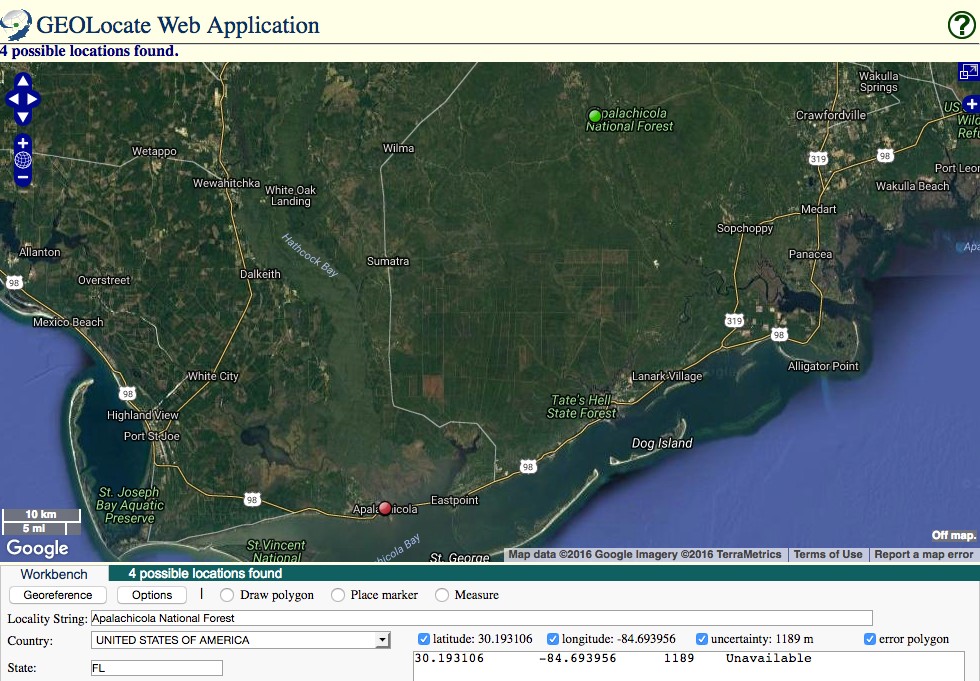

Georeferencing involves reading the locality information on a specimen label and pinpointing that location on a map. For those of you who are familiar with specimen labels, you’ll know that this isn’t always as straightforward as one would like (Figure 1). So, can a volunteer accurately and precisely determine a specimen’s collection locality based on, at best, a couple of sentences on a label? In this research project, we set out to determine just that – how accurate are citizen scientists at georeferencing? Are they as accurate as a georeferencing algorithm or paid technicians? In our research, the citizen scientists we worked with were students at Florida State University (FSU) and Tulane University. The FSU students georeferenced plant specimens from the nearby Apalachicola National Forest, and the Tulane students georeferenced fish specimens from around the US. Both groups used GEOLocate software, which presents users with potential localities based on a submitted text string (Figure 2). The GEOLocate algorithm provides a “best guess” locality, and this point was used in our comparative analysis. Students were presented with the locality information as text and were asked to determine the latitude and longitude using GEOLocate and its various features, such as habitat and political map layers and zoom and pan functionality. The students recorded the latitude and longitude, and these values were compared to those produced by the GEOLocate algorithm and the experts. At FSU, each of 270 localities was georeferenced by 8 students, and at Tulane each of 3,372 localities was georeferenced by one or two students. We also worked with experienced botanists (at FSU) and technicians (at Tulane) who served as experts and provided our “true” point.

Fig. 1. A particularly difficult and vague label from a plant specimen from the Robert K. Godfrey Herbarium, FSU. The town, Lake Stearns, Fla., has changed names since this plant was collected in 1927 (interestingly, it is now called Lake Placid). The imprecision of the locality information on the label, and therefore the clear difficulty in finding this exact location on a map, is compounded by the fact that the habitat provided, “High pine land,” is likely now residential development. This label would be challenging to georeference for anyone except someone intimately familiar with, or willing to do substantial research on, the region and its history.

You can read all the nitty-gritty details of our methods and analysis in the open-access publication, but for now I’ll cut to the chase. The student georeferences were more accurate than the GEOLocate algorithm alone. This provides evidence that having a human in the workflow is still very important. The distance between the student points and the expert point at FSU ranged from 0.18 to 37.08 km, with an overall mean distance of 4.62 km. At Tulane, the results were surprisingly similar: student points were 0.90 to 40.70 km from the expert point (overall mean = 8.30 km).
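For readers who want to run a similar comparison on their own georeferences, here is a minimal sketch of how the distance between student points and an expert point could be computed. It assumes the haversine (great-circle) formula on a spherical Earth; the publication may have used a different distance method, and the function names and coordinates below are hypothetical illustrations, not data from our study.

```python
import math

# Mean Earth radius in kilometres (spherical approximation; an assumption of this sketch).
EARTH_RADIUS_KM = 6371.0088


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def summarize_errors(expert_point, student_points):
    """Return (min, max, mean) distance in km from each student point to the expert point."""
    distances = [
        haversine_km(expert_point[0], expert_point[1], lat, lon)
        for lat, lon in student_points
    ]
    return min(distances), max(distances), sum(distances) / len(distances)


if __name__ == "__main__":
    # Hypothetical coordinates for illustration only (not from the study).
    expert = (30.25, -84.65)
    students = [(30.26, -84.66), (30.20, -84.60), (30.30, -84.70)]
    low, high, mean = summarize_errors(expert, students)
    print(f"min={low:.2f} km, max={high:.2f} km, mean={mean:.2f} km")
```

Applied to each locality, the minimum, maximum, and mean give the same kind of range and overall mean reported above.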

This level of accuracy from citizen scientists with minimal training is encouraging and, depending on the downstream use of the data, may be adequate. It is likely that a +/-5 km range of accuracy is sufficient for certain types of research: habitat ranges of mobile species, large-scale modeling, and studies using county-level presence data, to name a few. Botanical re-sampling studies, on the other hand, would still need expert-level georeferences in order to revisit individual plants.

Many improvements to our workflow would likely increase the accuracy of citizen scientist georeferences, both in future projects and in projects with a broader network of volunteers. For example, if a first pass can be made of labels to determine coarse-level locality information, then individuals from that region can be targeted to assist in the work. This may be possible with an OCR (optical character recognition) step added at the beginning. Enhancements to the GEOLocate platform, to citizen scientist training materials, and to post-process analyses could also improve citizen scientist-generated georeferences.

Adding digital collection locality information to the world’s vast specimen collections will help make them more discoverable, useful, and valuable. Further, including citizen scientists in the process helps the general public gain an appreciation for specimens, improves STEM and geographical literacy, and builds our biodiversity community.

Read the full publication for more information about how we found consensus among student points at FSU, how these results fit into the bigger picture of citizen science projects and georeferencing research, and our recommendations for future directions.

Figure 2. A screenshot of GEOLocate from a search with the locality inputs “Apalachicola National Forest; United States of America; FL”, the region from which specimens were collected in the FSU study. The green dot in the north represents the GEOLocate algorithm’s (accurate) best guess at the locality, and the red dot in the south represents another possible location with a similar name, the town of Apalachicola, FL.